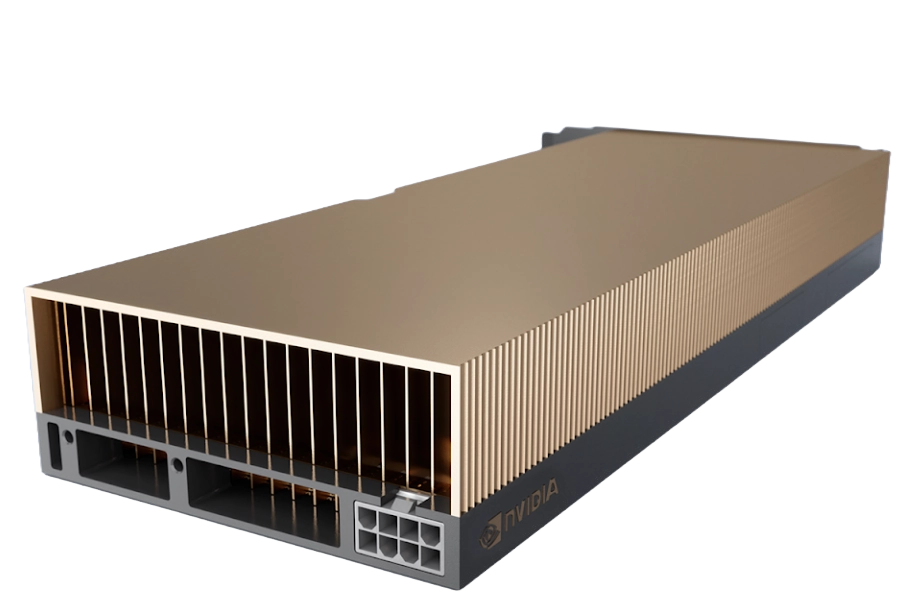

H100s from $2.25/hr. No quotas, no commitments.

TensorDock brings a global fleet of GPU servers to your fingertips for up to 80% less than other clouds.

Start with just $5 and launch a server in 30 seconds.

No quotas, hidden fees, or price gouging. We mean it.

Focus on building your product, not stressing over the infrastructure behind it.

Deploy a GPU

The perfect GPU for every use case and budget, from RTX 4090 to HGX H100 SXM5 80GB.

Find your niche among our 45 available GPU models.

Take advantage of our consumer GPUs from $0.12/hr for up to 5x inference value.

Switch now

Root access and dedicated GPUs. Get full OS control, manage your own drivers, and never run into compatibility issues again. Plus, Windows 10 support.

Multithreaded and optimized end-to-end for uncompromising VM deployment speed. Docker included on all VM templates.

See for yourself

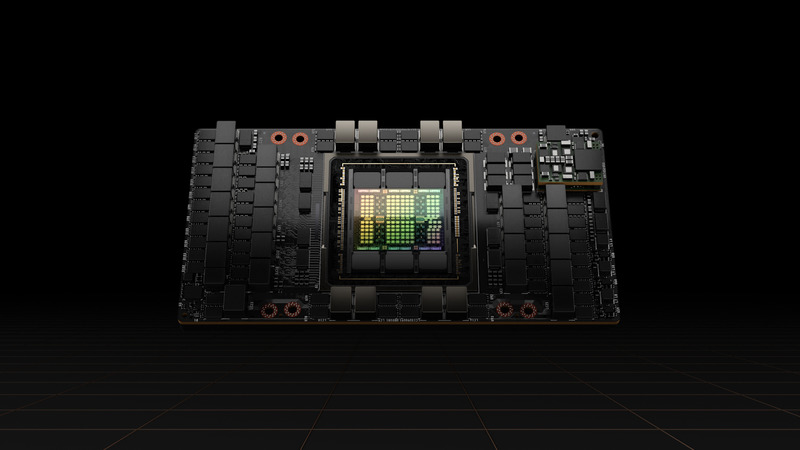

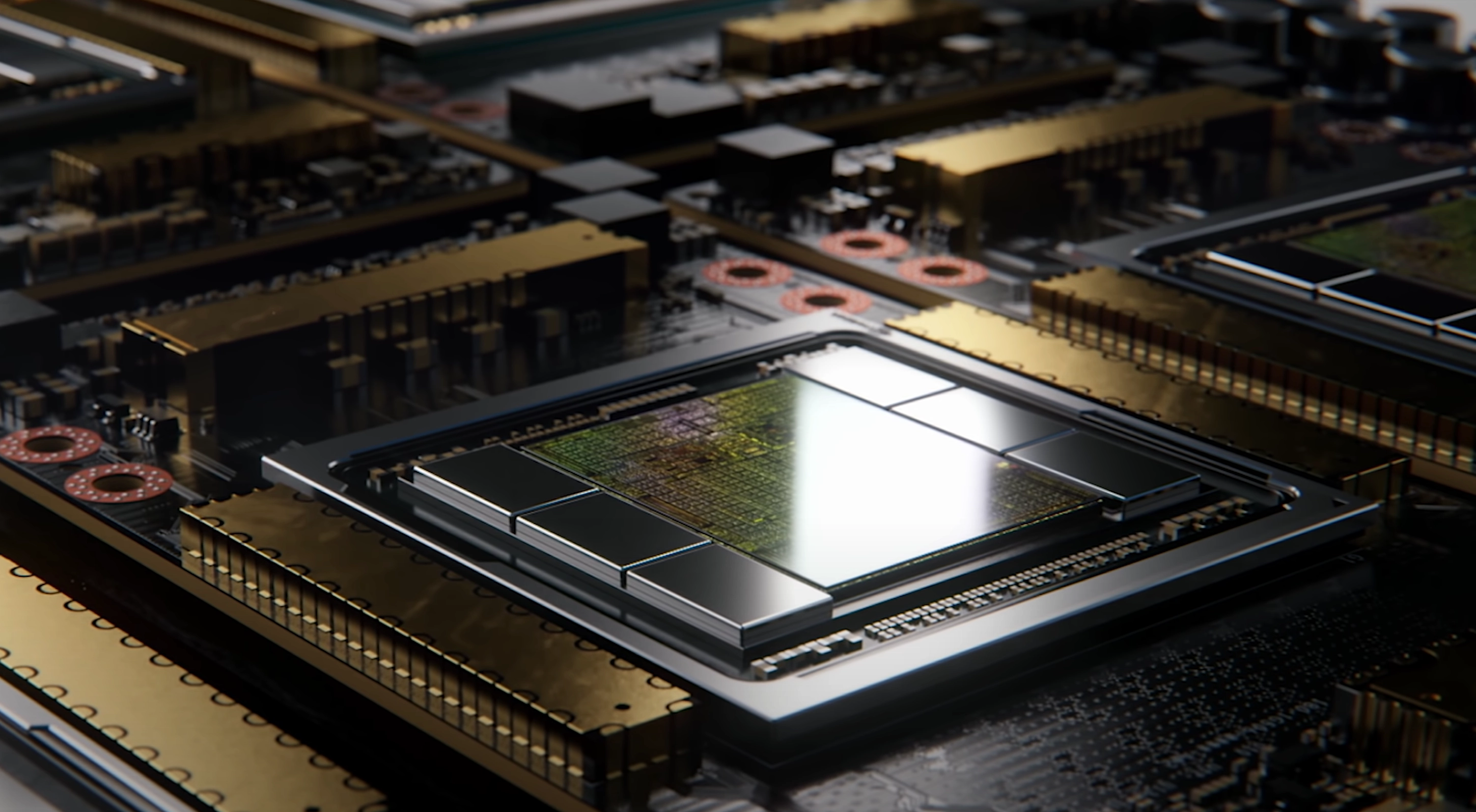

Enterprise-grade servers hosted in tier 3/4 data centers

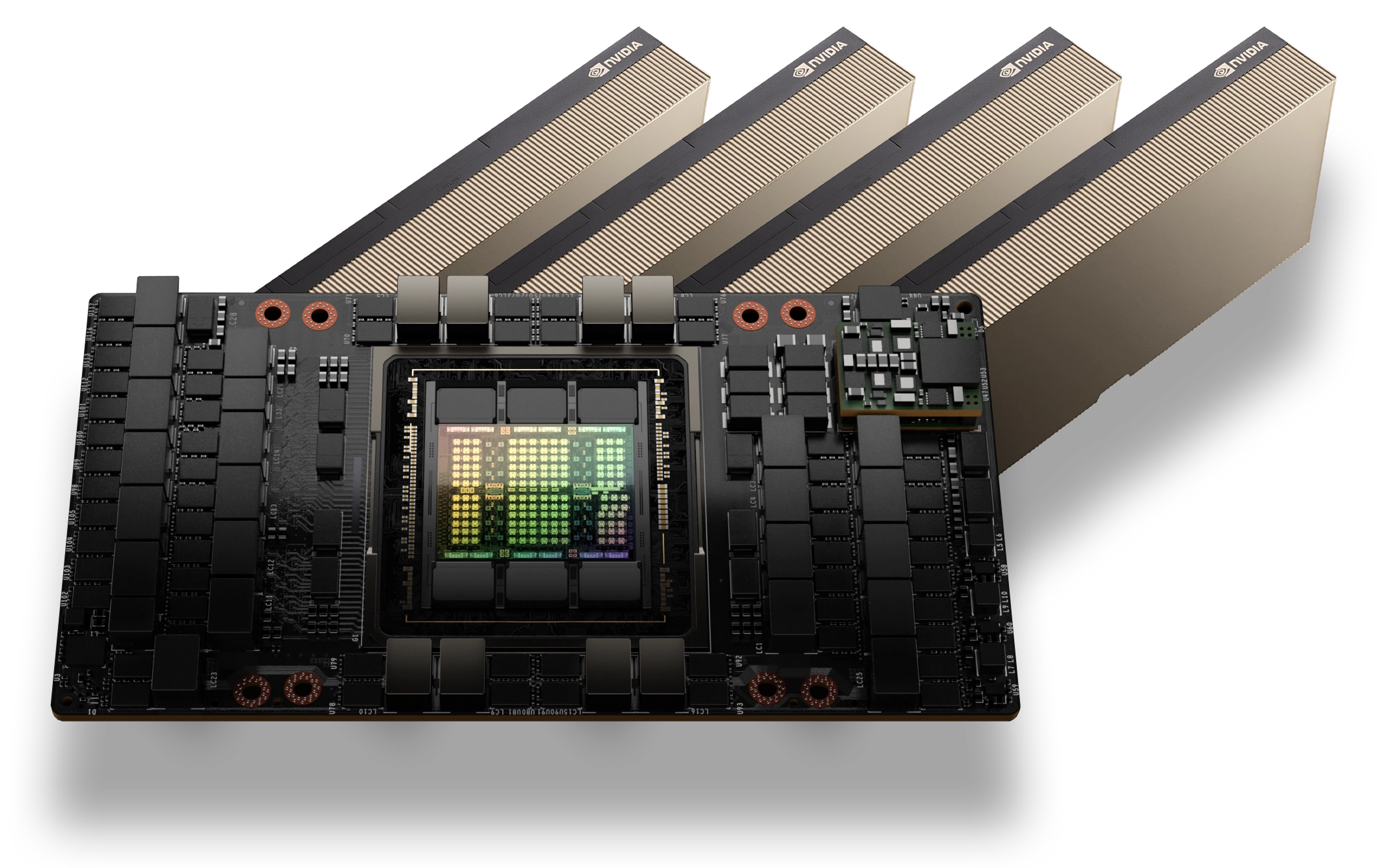

For builders needing training and inference with no compromises.

Deploy an H100 SXM

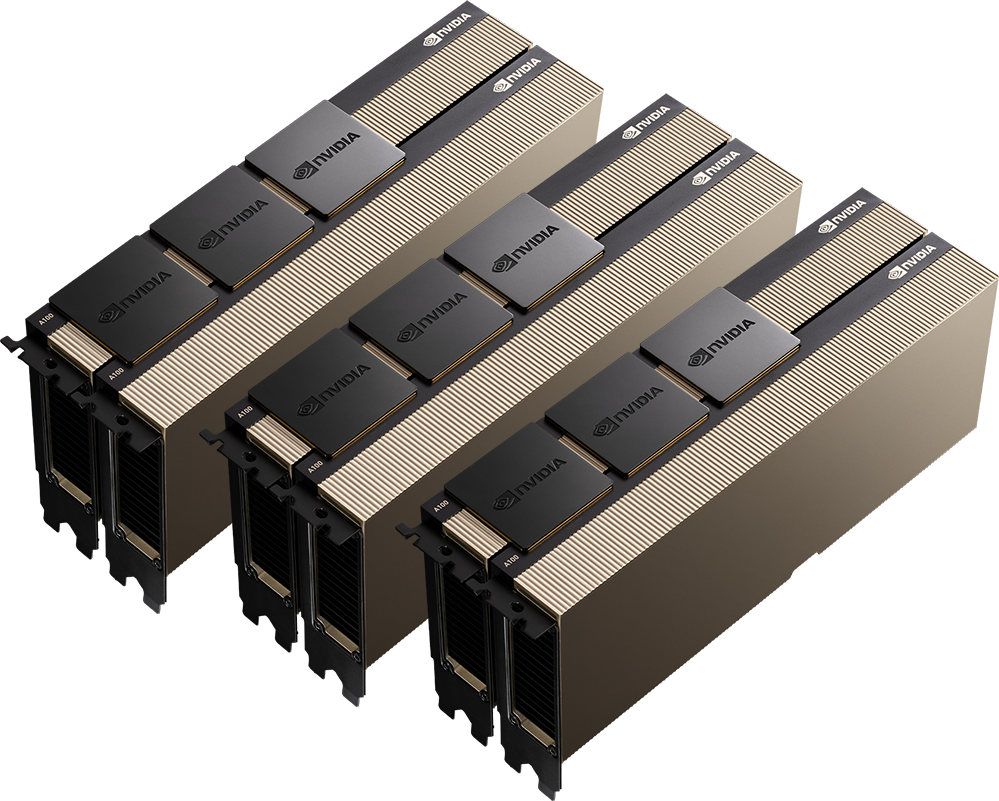

The best balance between price and performance for AI inference.

Deploy an A100 SXM

Truly unbeatable value for gaming, image processing, and rendering.

Deploy an RTX 4090

The hyperscaler experience without the hyperscaler price.

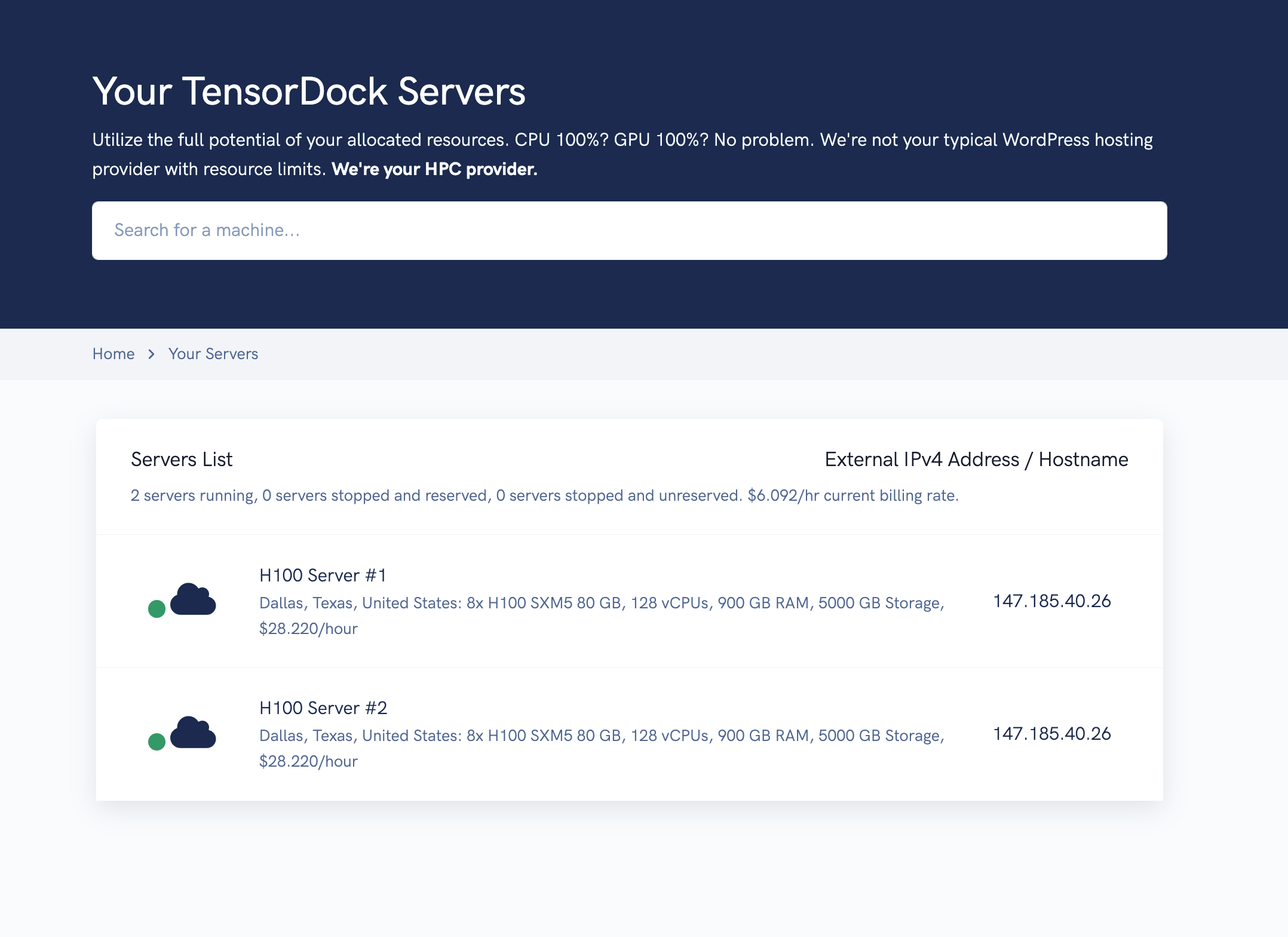

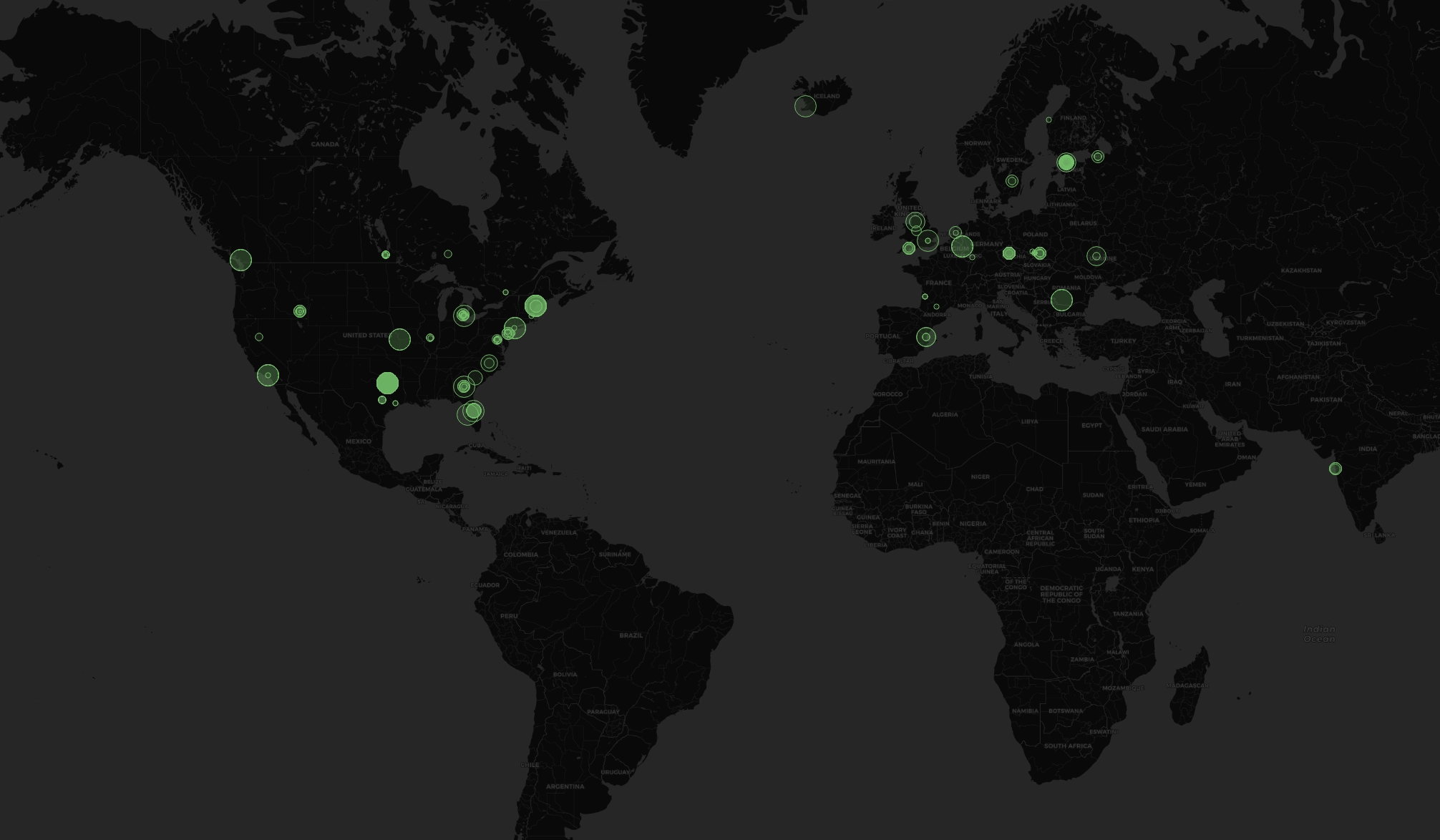

Up to 30,000 GPUs available through our close partners.

Hundreds ready to deploy now on our dashboard, distributed across 100+ locations in over 20 countries.

We're the last cloud you'll ever need. Reach your customers where they are.

Scale now

Well-documented, well-maintained, well-everything.

Built from scratch, with metadata, availability, and everything you need to manage your servers on TensorDock.

Try it out

All hosts are vetted by TensorDock for quality hardware, technical knowledge, and communication skills before their GPUs are listed.

TensorDock holds hosts to a 99.99% uptime standard and requires maintenance to be scheduled at least two weeks in advance. Hosts who don't continue to meet our standards are removed from our platform.
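To put the 99.99% figure in concrete terms, here is a rough back-of-the-envelope sketch. It assumes the standard is measured over a 365-day year, which the page does not specify, and the function name is ours for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: uptime measured over a 365-day year


def allowed_downtime_minutes(uptime_percent: float,
                             period_hours: float = HOURS_PER_YEAR) -> float:
    """Minutes of downtime that still satisfy the given uptime percentage."""
    return period_hours * 60 * (1 - uptime_percent / 100)


# 99.99% uptime leaves under an hour of downtime per year.
print(f"{allowed_downtime_minutes(99.99):.1f} minutes per year")
```

Under that assumption, a host could be down for roughly 52.6 minutes in a year and still meet the standard.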

Learn more

Servers for any niche, for up to 80% less than other clouds. All available on demand.

AI workloads requiring shell access

Our globally distributed GPU fleet. 99.99% uptime standard and no ingress/egress fees.

Deploy a GPU

Pre-configured, deploys in seconds

Multithreaded stack optimized end-to-end for uncompromising VM deployment speed.

Deploy an Instant VM

Transcoding and batch processing

The latest Xeon and EPYC CPUs, available from $0.012/hr in secure, multihomed data centers.

Deploy a CPU

Let's chat! If you don't see what you need, we can work it out. We'll get back to you within 24 hours.

Book a meeting

Message us

Most hardware on our platform is now hosted in certified data centers.

But as an additional layer of security, we revoke SSH access from our hosts so they cannot access customer data without our knowledge.

TensorDock restricts hostnode access to only those who need it. We have an agent monitoring hostnodes for logins and suspicious activity, from any source.

Learn more.

We operate on a pay-as-you-go model. You deposit funds, and we deduct from your balance continuously once a server is deployed. When your balance reaches $0, your servers are automatically deleted. If you need to rent servers long-term, reach out to discuss our reserved pricing.
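Because servers are deleted when the balance hits $0, it helps to know how long a deposit will last. The sketch below is a simple estimate, not TensorDock's billing logic: it assumes a flat hourly rate per server with no other charges, and the function name is hypothetical.

```python
from decimal import Decimal


def hours_until_deletion(balance_usd: Decimal,
                         hourly_rates_usd: list[Decimal]) -> Decimal:
    """Estimate runtime left before the balance reaches $0.

    Assumes each running server is billed at a flat hourly rate and
    that nothing else draws on the balance.
    """
    total_rate = sum(hourly_rates_usd, Decimal("0"))
    if total_rate <= 0:
        raise ValueError("need at least one server with a positive hourly rate")
    return balance_usd / total_rate


# A $5 deposit funding one server at the advertised $2.25/hr starting price.
runway = hours_until_deletion(Decimal("5"), [Decimal("2.25")])
print(f"{runway:.2f} hours")
```

With those numbers the deposit covers a little over two hours, so top up before long-running jobs or back up your data before the balance runs out.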

We're a marketplace of independent hosts who compete and set their own pricing. As the customer, this ensures you always have access to the market's best pricing.

Additionally, hosts have different locations and redundancy, leading to varying costs. Our marketplace offers you options to pay based on your preferences.

airgpu relies on TensorDock's API to deploy Windows virtual machines for cloud gamers. TensorDock's abundant GPU stock allows airgpu to scale during weekend peaks without worrying about compute availability.

ELBO uses TensorDock's reliable and secure GPU cloud to generate art. TensorDock's highly cost-effective servers run their workloads faster for less than the big clouds.

Professor Skyler Liang from Florida State University researches GANs with TensorDock GPUs. TensorDock's superior economics allow researchers to do more with their limited university budgets.

Creavite combines TensorDock's Windows VMs with Adobe software to render logo animations. TensorDock's CPU-only instances allow Creavite to fully integrate their workflows and stay on one cloud.

We connect customers to the best available compute. Cutting-edge hardware in Tier 3/4 Data Centers, for maximum security and reliability. Converted mining rigs, for maximum price-to-performance. We've got both, for whatever suits your needs best.

Our marketplace approach guarantees the best deal in the industry. Get the availability and location distribution of a hyperscaler, without the quotas or nonsense.

Available

In stock

Through partners