From computer vision to image generation, the NVIDIA 4090 Tensor Core GPU accelerates the most demanding visual workloads.

Up to 60% faster PyTorch performance than the 3090

Up to 80% improvement on FP16 training throughput over the 3090

Clusters continuously coming online through Q1 2024.

Reserve access today, or deploy one now from $0.37/hr.

512x512, stable-diffusion-v1-5, 50 steps, WebUI

The 4090 has fast GDDR6X memory for ML workloads.

Pricing applies to TensorDock's cloud platform. See below for more pricing details.

The NVIDIA 4090 is based on NVIDIA's Ada Lovelace GPU architecture. It's a powerhouse in the realm of visual computing GPUs, catering to demanding applications in gaming, data analytics, and computer vision.

The 4090 is fast. Featuring 512 fourth-generation Tensor Cores and 16,384 CUDA Cores, it provides significant performance improvements for AI and machine learning applications compared to the 3090.

Apart from pure performance, its massive 24 GB of VRAM and over 1 TB/s of memory bandwidth make it a strong value for tasks that require large amounts of fast memory.

The 4090 boasts advanced features such as DLSS, which uses AI upscaling to enable more efficient cloud gaming and rendering without sacrificing quality.

Overall, the NVIDIA 4090 represents a significant leap forward in GPU technology, offering strong performance and efficiency for the most demanding computing tasks.

See full data sheet.


CUDA cores are the basic processing units of NVIDIA GPUs. More CUDA cores generally means more parallel processing throughput.

Tensor cores are specialized processing units designed to efficiently execute the matrix operations used in deep learning.


The more VRAM, the more data a GPU can store at once. The 4090 24GB has 1,006 GB/s of memory bandwidth.

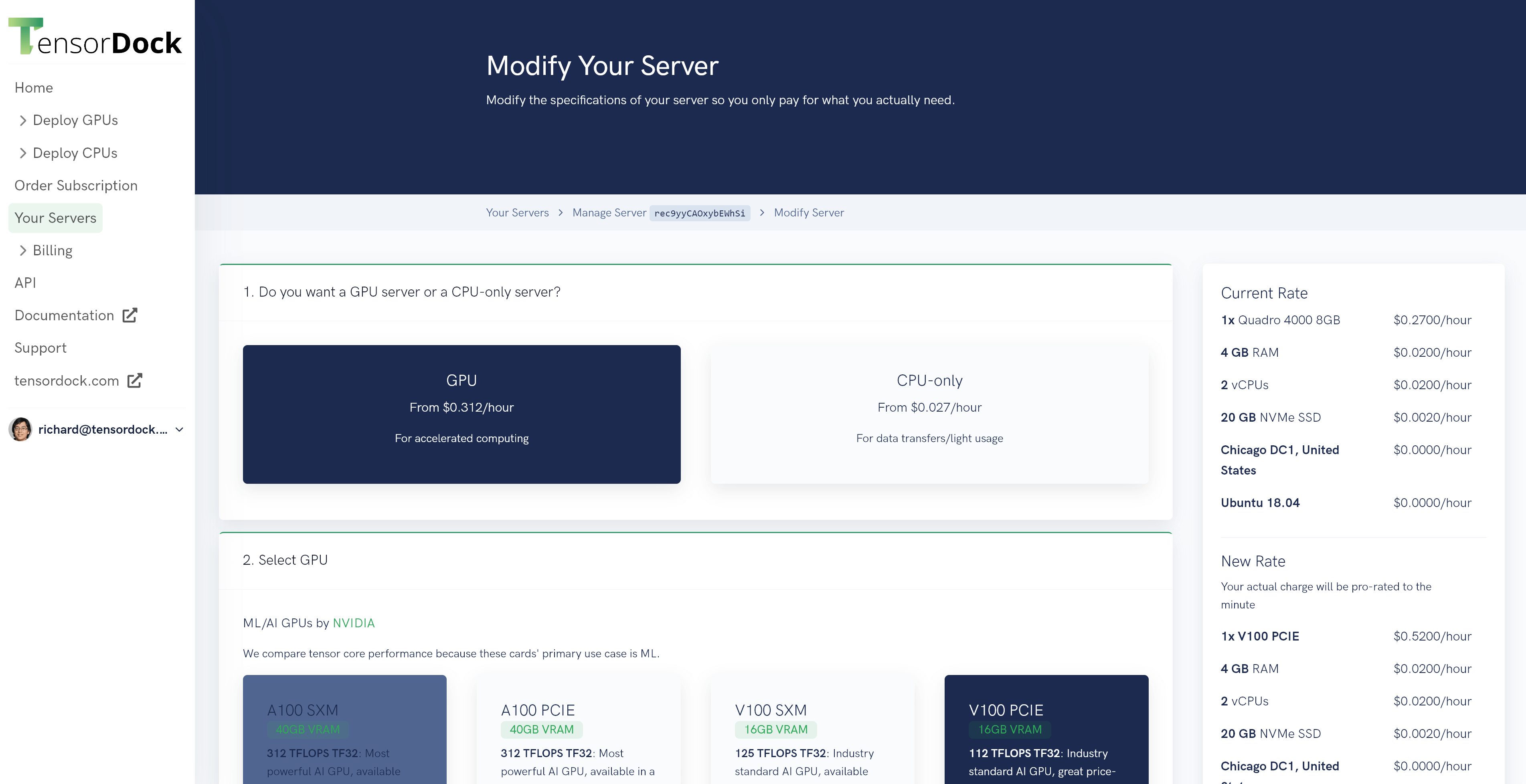

Unleash unparalleled computing power on the industry's most cost-effective cloud.

Our hostnodes come with beefy resources, ensuring that your work is never bottlenecked by the hardware.

We have a select number of hostnodes that we offer on-demand.

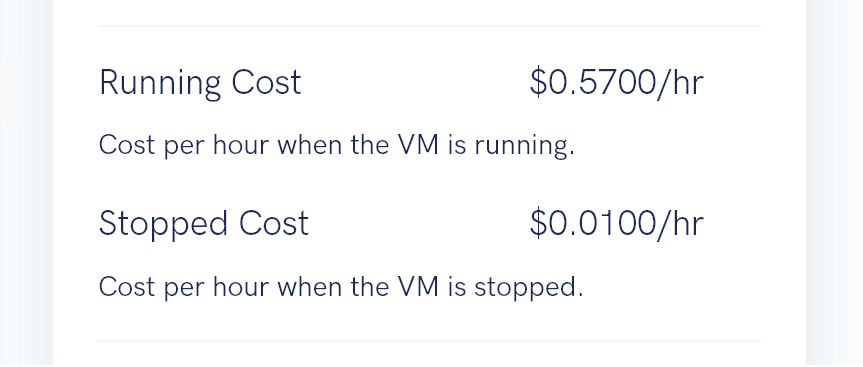

You can deploy 4090 virtual machines with 1 to 8 GPUs fully on-demand, starting at just $0.37/hour depending on the CPU and RAM allocated, or $0.20/hour if deployed as a spot instance. We are seeing high demand, so it is difficult to get a multi-GPU 4090 VM at this time.

As such, we highly recommend contacting us to reserve an entire 8x hostnode from an upcoming batch.

We built our own hypervisor, our own load balancers, and our own orchestration engine, all so that we can deliver the best performance.

VMs in 10 seconds, not 10 minutes. Instant stock validation. Resource webhooks/callbacks. À la carte resource allocation and resizing.

For on-demand servers, when you stop and unreserve your GPUs, you are billed at a lower rate for storage only. You can always request an export of your VM's disk image.

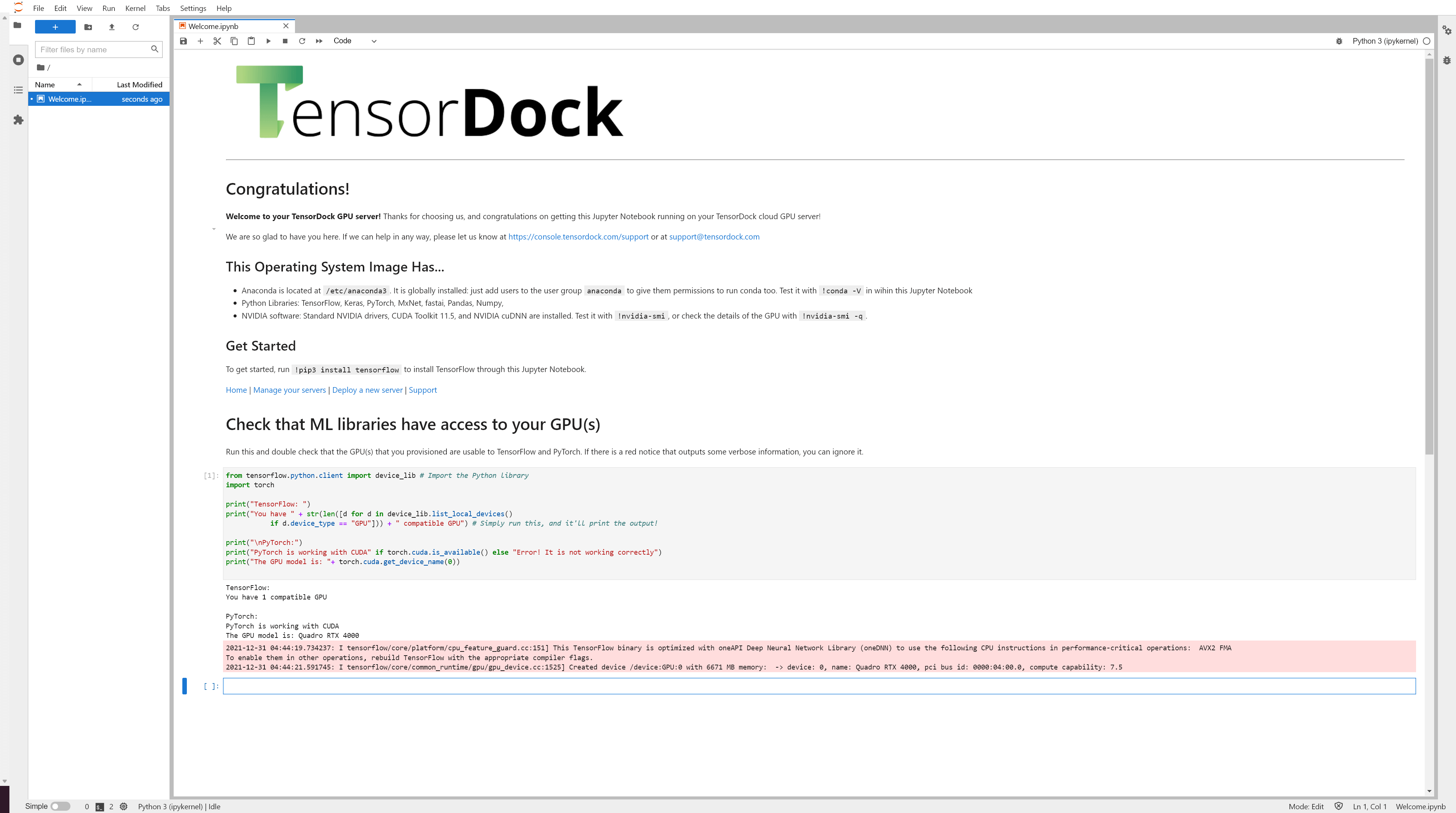

Deploy our machine learning image and get Jupyter Notebook/Lab out of the box. Slash your development setup times.

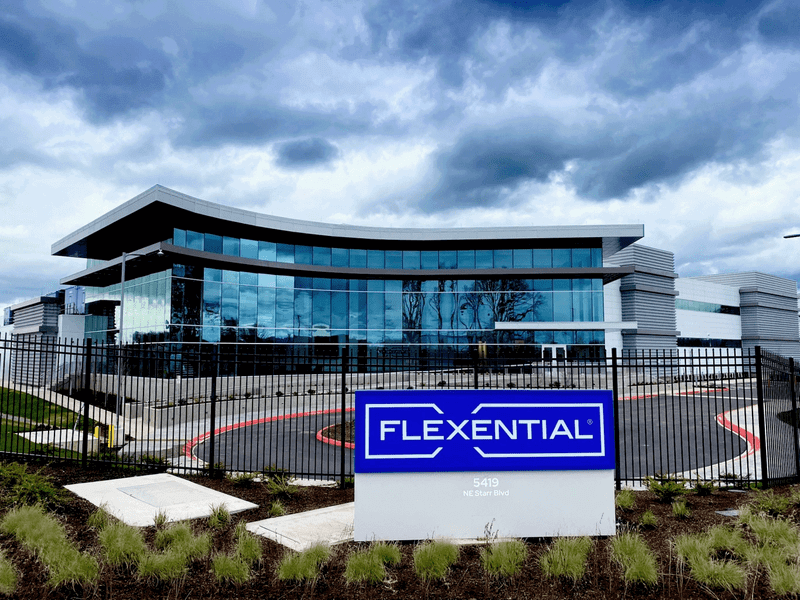

We have one primary 4090 data center, along with many other hosts across the globe.

Ask about each data center's compliance certifications. Many are SSAE-18 SOC2 Type II, CJIS, HIPAA, & PCI-DSS certified, so you can trust that your most mission-critical workloads are in a safe place.

Hi! We're TensorDock, and we're building a radically more efficient cloud.

Five years ago, we started hosting GPU servers in two basements because we couldn't find a cloud suitable for our own AI projects. Soon, we couldn't keep up with demand, so we built a partner network to source supply.

Today, we operate a global GPU cloud with 27 GPU types located in dozens of cities. Some are owned by us and some are owned by partners, but all are managed by us.

In addition to GPUs, we also offer CPU-only servers.

We speak in tokens and iterations, in IB and TLC/MLC, and we're excited to serve you.


The 4090 is available for deployment at multiple multihomed tier 1-3 data centers. Each is protected by 24/7 security, powered via redundant feeds, and backed by a power and network SLA.

Experience sub-20 ms latencies to key population centers along the US west coast for low-latency LLM inference traffic.

Every layer of our infrastructure is protected by a variety of security measures, ensuring privacy and security for our customers.

Read more about our security.

We're thrilled to offer bare-metal virtualization for customers looking to rent full 8x configurations for a long period of time.

For on-demand customers, we offer KVM virtualization with root access and a dedicated GPU passed through. You get to use the full compute power of your GPU without resource contention.

For our on-demand platform, we operate on a pre-paid model: you deposit money and then provision a server. Once your balance nears $0, the server is automatically deleted. For these 4090s, we are also earmarking a portion to be billed via long-term contracts.
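To make the pre-paid model concrete, here is a minimal Python sketch that estimates how many hours a deposit lasts before the balance runs out. It assumes a flat hourly rate using the advertised starting prices ($0.37/hour on-demand, $0.20/hour spot; actual rates vary with the CPU and RAM allocated) and a hypothetical `cutoff` balance at which the server is deleted, since the exact threshold is not stated. `runway_hours` is an illustrative helper, not part of any TensorDock API.

```python
# Hypothetical sketch: estimate how long a prepaid deposit keeps a VM running.
# Assumptions (not from TensorDock's documentation): billing is a flat hourly
# rate, and the VM is deleted once the balance falls to `cutoff` dollars
# (the page only says the balance "nears $0").

ON_DEMAND_RATE = 0.37  # $/hour, advertised starting on-demand price
SPOT_RATE = 0.20       # $/hour, advertised spot price


def runway_hours(deposit: float, hourly_rate: float, cutoff: float = 0.0) -> float:
    """Return the hours of runtime a deposit covers before the balance reaches `cutoff`."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    # Only the portion of the deposit above the cutoff can be spent.
    usable = max(deposit - cutoff, 0.0)
    return usable / hourly_rate


if __name__ == "__main__":
    deposit = 50.0
    for label, rate in (("on-demand", ON_DEMAND_RATE), ("spot", SPOT_RATE)):
        hours = runway_hours(deposit, rate)
        print(f"${deposit:.2f} at ${rate:.2f}/hr ({label}): {hours:.1f} h (~{hours / 24:.1f} days)")
```

For example, a $50 deposit covers roughly 135 hours at $0.37/hour versus 250 hours at the $0.20/hour spot rate. Storage charges for stopped servers are not modeled.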

Go ahead and build the future on TensorDock. Cloud-based machine learning and rendering have never been easier or cheaper.

Deploy a GPU Server