Autoscaling GPU containers for your inference workloads.

Build your API endpoint, not the infrastructure to run it on.

Build your container from scratch, or use one of our preconfigured templates. All the container needs to do is provide an HTTP endpoint.
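The only requirement is an HTTP endpoint, so a custom container can be very small. Here is a minimal sketch that uses only the Python standard library. It is not one of TensorDock's templates. The port (8080), the `/health` and `/predict` routes, and the echo-style placeholder response are assumptions for illustration only.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class InferenceHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: /predict echoes its JSON input in place of real inference."""

    def do_GET(self):
        # Lightweight liveness check (the /health path is an assumption).
        if self.path == "/health":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":  # hypothetical route name
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self._send_json(400, {"error": "invalid JSON"})
            return
        # Placeholder: call your model here instead of echoing the input.
        self._send_json(200, {"input": payload, "output": "placeholder"})

    def _send_json(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        # Silence the default per-request logging to stderr.
        pass


def make_server(port=8080):
    # Bind on all interfaces so the container's published port can reach it.
    return ThreadingHTTPServer(("0.0.0.0", port), InferenceHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Packaged in an image whose start command runs this script, it would satisfy the "provide an HTTP endpoint" requirement. Any web framework would also work.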

Configure your container's CPU, RAM, and disk resources. Rank GPUs by priority, and set the minimum and maximum number of replicas.
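These settings can be pictured as a small spec object. The sketch is purely illustrative. `ContainerGroupSpec`, its field names, the allowed replica range, and the rule that `pick_gpu` returns the highest-ranked GPU model that currently has capacity are all assumptions about how a priority list might be applied. They are not TensorDock's API.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class ContainerGroupSpec:
    """Hypothetical container-group spec; field names are illustrative only."""
    vcpus: int
    ram_gb: int
    disk_gb: int
    gpu_priority: List[str]  # most preferred GPU model first
    min_replicas: int = 1
    max_replicas: int = 1

    def __post_init__(self):
        if not self.gpu_priority:
            raise ValueError("rank at least one GPU model")
        # Assumption: zero is allowed as a minimum; the real platform may differ.
        if not 0 <= self.min_replicas <= self.max_replicas:
            raise ValueError("need 0 <= min_replicas <= max_replicas")

    def pick_gpu(self, available: Set[str]) -> Optional[str]:
        # Walk the ranked list and return the first model that has capacity.
        for model in self.gpu_priority:
            if model in available:
                return model
        return None
```

With `gpu_priority=["a100-80gb", "rtx4090"]`, for example, a replica would land on an A100 when one is free and fall back to a 4090 otherwise.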

We'll handle the rest — autoscaling, load balancing, and redundancy. You'll be ready to send API requests to your new endpoint in minutes.

TensorDock's managed GPU containers are the easiest way to deploy a scalable API endpoint for any use case, and they're available at no additional charge beyond the cost of the compute resources.

LLM inference

Image endpoints

Transcoding APIs

Data analytics

Our managed container platform lets you deploy container groups atop any of our massive fleets of GPUs and autoscale with ease.

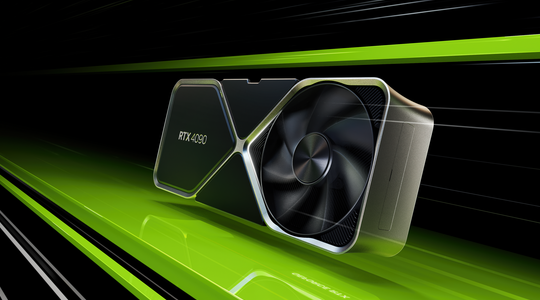

Uncompromising performance for image and video processing, gaming, and rendering.

Deploy a 4090 container

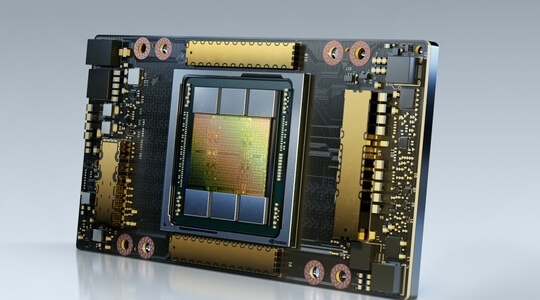

Accelerated machine learning and LLM inference with 80 GB of GPU memory.

Deploy an A100 container

24 GPU models are available; choose the one that best suits your workload.

Customize a container

Need help deciding which GPU type to use for your container group, or need a custom white-glove solution? Chat with our sales team.

Schedule a video chat

Send us an email or message

When you spend more than $10,000/month on TensorDock:

A point of contact to meet your needs.

Even faster response times.

Chat with our team in real time.

We'll automatically add a replica if the average GPU utilization over a 10-minute period surpasses 80%, and we'll delete a replica if the average GPU utilization over a 10-minute period is below 20%.
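The scaling rule is a threshold check on a rolling average. This minimal sketch makes two assumptions. First, utilization is sampled once per minute, so ten samples cover the 10-minute window. Second, the result is clamped to the minimum and maximum replica counts you configure. `desired_replicas` and `UtilizationWindow` are hypothetical names.

```python
from collections import deque


def desired_replicas(current, avg_util, min_replicas, max_replicas,
                     scale_up_above=80.0, scale_down_below=20.0):
    """Return the replica count after one scaling decision.

    avg_util is mean GPU utilization (percent) over the last 10-minute window.
    Clamping to [min_replicas, max_replicas] is an assumption of this sketch.
    """
    if avg_util > scale_up_above:      # "surpasses 80%": add one replica
        current += 1
    elif avg_util < scale_down_below:  # "below 20%": remove one replica
        current -= 1
    return max(min_replicas, min(max_replicas, current))


class UtilizationWindow:
    """Rolling window of per-minute GPU utilization samples (assumed cadence)."""

    def __init__(self, minutes=10):
        self.samples = deque(maxlen=minutes)  # oldest samples drop off automatically

    def add(self, util_percent):
        self.samples.append(util_percent)

    def average(self):
        return sum(self.samples) / len(self.samples) if self.samples else 0.0
```

Utilization between 20% and 80%, inclusive, leaves the replica count unchanged. That gap keeps the group from oscillating between scaling up and scaling down.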

We use a round-robin load balancer to distribute requests evenly across replicas. We try to keep replicas within the same general geographic region, for low latency, but on different physical servers, so that a single server failure doesn't take down your endpoint.
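Round-robin selection cycles through the current replicas in order, so each one receives an equal share of requests. Here is a hypothetical, in-process illustration of the technique. It is not TensorDock's load balancer. `RoundRobinBalancer` and `set_replicas` are made-up names, and the region- and server-aware placement happens elsewhere, so it is not modeled.

```python
import threading


class RoundRobinBalancer:
    """Minimal thread-safe round-robin selector over replica addresses."""

    def __init__(self, replicas):
        self._replicas = list(replicas)
        self._next = 0
        self._lock = threading.Lock()

    def set_replicas(self, replicas):
        # Called when autoscaling adds or removes a replica.
        with self._lock:
            self._replicas = list(replicas)

    def pick(self):
        with self._lock:
            if not self._replicas:
                raise RuntimeError("no replicas available")
            # Modulo keeps the index valid even after the replica list shrinks.
            i = self._next % len(self._replicas)
            self._next = (i + 1) % len(self._replicas)
            return self._replicas[i]
```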

We operate on a prepaid model: you deposit money and then provision compute. You must add more funds manually; we do not automatically charge your card.

You can provide a Docker image from either a public registry (e.g., Docker Hub) or a private registry; for a private registry, you'll also need to provide authentication credentials. We recommend using a private registry for security reasons.
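The image reference itself determines whether an image comes from Docker Hub or another registry. `registry_for` applies a simplified version of Docker's reference convention: the first path component counts as a registry host only if it contains `.` or `:` or equals `localhost`. `docker_auth_entry` shows the standard `auths` layout of a Docker client `config.json`, where `auth` is the base64 encoding of `username:password`. This page does not say how TensorDock collects registry credentials, so treat both helpers as hypothetical illustrations.

```python
import base64


def registry_for(image_ref: str) -> str:
    """Return the registry host an image reference points at (simplified Docker rule)."""
    first, sep, _ = image_ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"  # no registry host given, so the image is on Docker Hub


def docker_auth_entry(registry: str, username: str, password: str) -> dict:
    """Build a Docker config.json-style auth entry for a private registry."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"auths": {registry: {"auth": token}}}
```

For example, `pytorch/pytorch:2.1.0` resolves to Docker Hub, while `ghcr.io/acme/llm-server:1.0` resolves to the private host `ghcr.io`, which would need credentials.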

At the moment, we only accept 3D Secure credit card payments via Stripe. On request, we can manually accept cryptocurrency payments for deposits larger than $1,000 once you've completed a KYC check.

Yes, for a 5% payment processing fee. If you're unsure about whether we're the platform for you, you can start off with just $5 to test out our services.