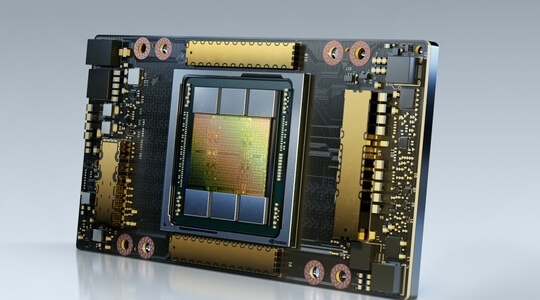

About the NVIDIA A100 GPU

The NVIDIA A100 is based on NVIDIA's Ampere GPU architecture. It's a powerhouse in the realm of GPUs, catering to demanding applications in AI, data analytics, and high-performance computing.

The A100 is fast. Its 432 third-generation Tensor Cores and 6912 CUDA Cores enable it to provide significant improvements in performance for AI and machine learning applications compared to previous-generation GPUs.

Beyond raw performance, its 80 GB of VRAM and 2 TB/s of memory bandwidth make it well suited to large-scale LLM, data analytics, and scientific computing workloads that require large amounts of fast memory.

The A100 also boasts advanced features like Multi-Instance GPU (MIG), which lets it efficiently serve multiple workloads simultaneously, with up to seven 10 GiB VRAM instances per physical GPU.
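To make the memory figures above concrete, here is a rough back-of-the-envelope sketch in Python. It only uses the numbers quoted in this section (80 GB of VRAM, 2 TB/s of bandwidth, 10 GiB per MIG instance). The helper names and the 7-billion and 70-billion parameter model sizes are hypothetical, chosen purely for illustration, and the estimate counts model weights only: it ignores activations, KV cache, and framework overhead, so real requirements will be higher.

```python
GB = 10**9    # decimal gigabyte
GIB = 2**30   # binary gibibyte

# Figures quoted in the text above.
A100_VRAM_BYTES = 80 * GB
A100_BANDWIDTH_BYTES_PER_S = 2 * 10**12  # 2 TB/s
MIG_SLICE_BYTES = 10 * GIB               # one of up to seven MIG instances


def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Memory needed for model weights alone (2 bytes/param = FP16 or BF16)."""
    return num_params * bytes_per_param


def fits(num_params: int, capacity_bytes: int, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in the given memory capacity."""
    return weight_bytes(num_params, bytes_per_param) <= capacity_bytes


def min_read_time_ms(num_bytes: int) -> float:
    """Lower bound on the time to read num_bytes once at peak bandwidth."""
    return num_bytes / A100_BANDWIDTH_BYTES_PER_S * 1000


if __name__ == "__main__":
    # Hypothetical model sizes, for illustration only.
    for name, params in [("7B", 7 * 10**9), ("70B", 70 * 10**9)]:
        size = weight_bytes(params)
        print(
            f"{name}: {size / GB:.0f} GB of FP16 weights, "
            f"fits full GPU: {fits(params, A100_VRAM_BYTES)}, "
            f"fits one MIG slice: {fits(params, MIG_SLICE_BYTES)}, "
            f"one full read takes at least {min_read_time_ms(size):.1f} ms"
        )
```

Under these assumptions, the FP16 weights of a 7-billion-parameter model fit comfortably on a full A100 but not in a single 10 GiB MIG instance, while a 70-billion-parameter model would need quantization or multiple GPUs.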

Overall, the NVIDIA A100 represents a significant leap forward in GPU technology, offering strong performance and efficiency for the most demanding computing tasks.

See full data sheet.